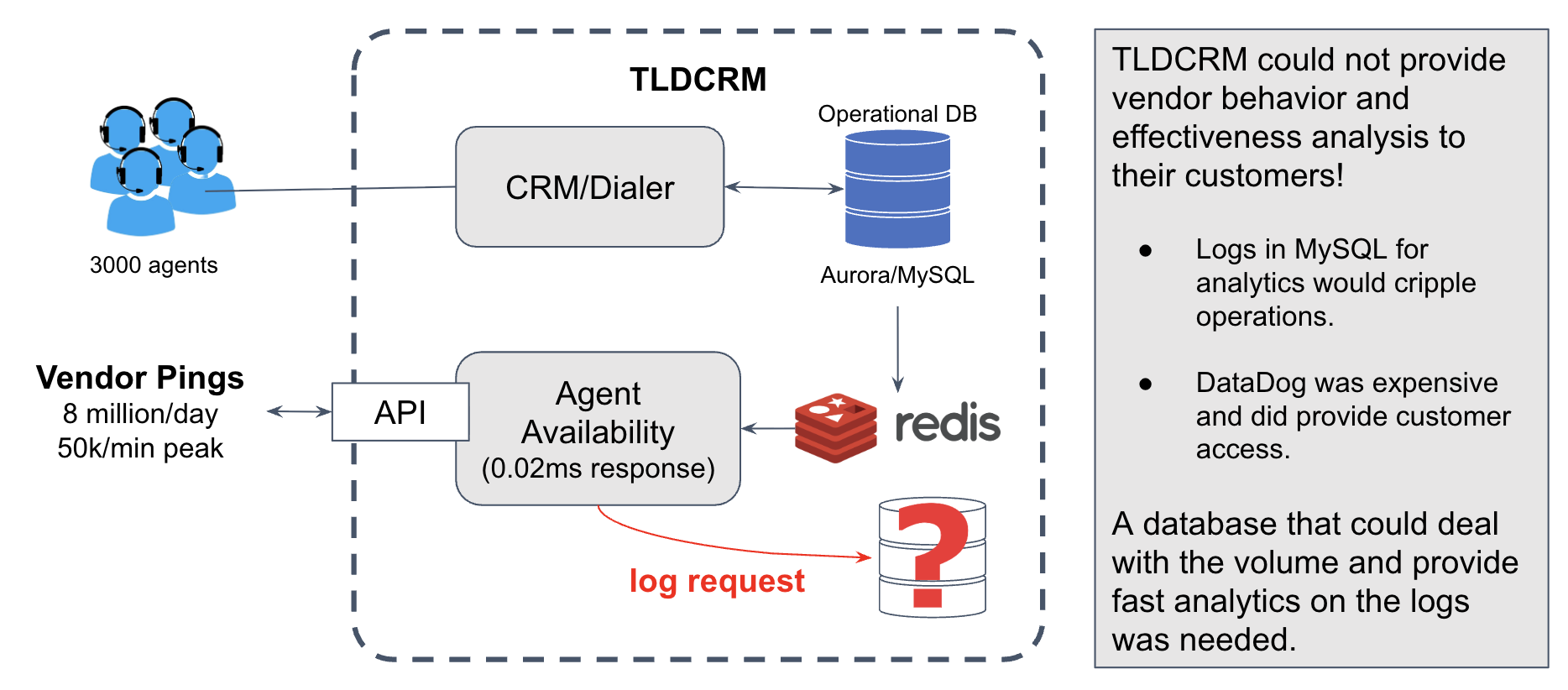

TL;DR: TLDCRM spent two years asking "where do I put my logs?". Their insurance call center platform processes 8 million API requests daily. Analytics on this data would give their customers insight into vendor behavior, but TLDCRM couldn't make request log analytics available to them. Using the operational DB for this would cripple production. Datadog was expensive and didn't give TLDCRM’s customers analytic access. Data lakes were too complex. We caught up with Alexander Conroy, CEO and lead developer of TLDCRM, and he walked us through the solution they found with Firebolt.

Alexander Conroy is the CEO and lead developer of TLDCRM, a rare combination in the SaaS world. He built the company's CRM and dialer platform from the ground up, and he still writes the code for critical infrastructure features. When we spoke with him, he'd just finished implementing a solution to a problem that had haunted him for over two years.

We wanted to understand: What makes a data logging problem so hard that even an experienced technical CEO can't solve it for 24 months?

Overview

Firebolt: Let's start with the basics. What does TLDCRM do?

Alexander: We're a SaaS platform that combines a CRM and predictive dialer specifically built for insurance call centers. Think health insurance, Medicare, life insurance, final expense - anything that can be sold at a premium. Our customers range from small agencies with 15 agents to massive call centers with 800+ seats.

The key differentiator is that we're all-in-one. Most of our competitors require you to buy a CRM separately, then buy a dialer, then hire consultants to integrate them. With us, it's one platform, one monthly fee, real-time support included. We even track policies and manage commissions, which most dialers don't do.

We have over 330 customers right now, and we're running on over 330 EC2 servers to support them - each account gets its own dedicated dialer infrastructure.

So what's this "ping" data we keep hearing about?

Alexander: [laughs] Yeah, the ping problem. This is what broke everything.

Here's how insurance lead generation works: Third-party vendors run ads on Facebook, TV, wherever. When someone says "I want a quote," that vendor needs to route the call to a buyer - one of our customers. But before they transfer the live caller, they ping us to check: Is an agent available? Do you accept calls from this area code? Have you already bought this lead?

We have to respond in under 20 milliseconds. Our system actually responds in 0.02 milliseconds because we cache everything in Redis. But the volume is insane.
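To make the three pre-transfer checks concrete, here is a minimal TypeScript sketch of a ping decision. It is an illustration, not TLDCRM's code: the `BuyerState` shape, the `decidePing` function, and the reason codes are all hypothetical, and a plain in-memory `Map` stands in for the Redis cache.

```typescript
// Hypothetical cache entry: the interview does not describe the real cache layout.
interface BuyerState {
  agentsAvailable: number;         // agents currently free to take a call
  acceptedAreaCodes: Set<string>;  // area codes this buyer accepts
  purchasedLeads: Set<string>;     // identifiers of leads already bought
}

type PingDecision =
  | { accept: true }
  | { accept: false; reason: "unknown_account" | "no_agent" | "area_code" | "duplicate" };

// An in-memory Map stands in for Redis here (an assumption made for illustration).
function decidePing(
  cache: Map<number, BuyerState>,
  accountId: number,
  areaCode: string,
  leadId: string,
): PingDecision {
  const state = cache.get(accountId);
  if (!state) return { accept: false, reason: "unknown_account" };
  // Is an agent available?
  if (state.agentsAvailable <= 0) return { accept: false, reason: "no_agent" };
  // Do you accept calls from this area code?
  if (!state.acceptedAreaCodes.has(areaCode)) return { accept: false, reason: "area_code" };
  // Have you already bought this lead?
  if (state.purchasedLeads.has(leadId)) return { accept: false, reason: "duplicate" };
  return { accept: true };
}
```

Because every check is a lookup against cached state, the decision never touches the operational database on the request path.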

Challenges

How insane?

Alexander: Eight million pings per day across all our customers. At peak times, that's over 50,000 API requests per minute. We have 29 ECS servers dedicated just to handling this traffic. It's over half of our entire platform load.

Each ping has metadata we need: vendor ID, timestamp, phone number - which we redact to just the area code for compliance - agent availability, accept or reject decision, response time. All critical data for optimizing call center operations through analytics.
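As a rough illustration of that redaction step, the TypeScript sketch below reduces a phone number to its area code before a log record is built. The `PingLog` fields mirror the metadata Alexander lists, but the exact shape, the function names, and the assumption of US-style 10-digit numbers are ours, not TLDCRM's.

```typescript
// Field names follow the metadata listed in the interview; the exact shape is an assumption.
interface PingLog {
  vendorId: string;
  timestamp: string;            // ISO 8601
  phoneAreaCode: string | null; // the only part of the phone number that is kept
  agentAvailable: boolean;
  decision: "accept" | "reject";
  responseTimeMs: number;
}

// Keep only the area code of a US-style number; return null if it cannot be extracted.
function toAreaCode(rawPhone: string): string | null {
  const digits = rawPhone.replace(/\D/g, "");
  // Drop a leading country code "1" from 11-digit numbers.
  const national = digits.length === 11 && digits.charAt(0) === "1" ? digits.slice(1) : digits;
  return national.length === 10 ? national.slice(0, 3) : null;
}

// Build the record that gets logged; the full phone number never leaves this function.
function buildPingLog(input: {
  vendorId: string;
  rawPhone: string;
  agentAvailable: boolean;
  accepted: boolean;
  responseTimeMs: number;
  now: Date;
}): PingLog {
  return {
    vendorId: input.vendorId,
    timestamp: input.now.toISOString(),
    phoneAreaCode: toAreaCode(input.rawPhone),
    agentAvailable: input.agentAvailable,
    decision: input.accepted ? "accept" : "reject",
    responseTimeMs: input.responseTimeMs,
  };
}
```

Redacting at the point where the record is created means nothing downstream (the dispatcher, the database, or the customer portal) ever sees the full number.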

And you couldn't store this data. Why not?

Alexander: [sighs] That's the question I've been asking for over two years. "Where do I put my logs?"

The obvious answer is MySQL, right? Our operational data is already in Aurora RDS. But I already knew it would cripple RDS. We'd be doing 50,000 inserts per minute. Searching through them even with indexes is very slow. It lags quite a bit. And the more indexes you add, the longer it takes to insert.

Plus, log queries would compete with our operational queries. When you have 3000 concurrent human agents on calls and the database is slow, that's a disaster. People are losing money every second the dialer is down.

So you tried other solutions?

Alexander: Oh yeah. I tried everything.

We started with Datadog because they sold us on this idea that we could use their system for customer-facing analytics. However, it turned out that this was not the case and we ended up paying $20,000 a month - roughly $240,000 a year - for features we couldn't actually use.

The problem is, I can't just plug into Datadog on their query side because of rate limits. Things like Datadog aren't meant for client access. They're meant for the infrastructure owner's access.

We eventually reduced our bill down to $2,500 a month after we realized we were paying for monitoring features we'd never use.

What about building a data lake?

Alexander: [laughs] So many terms and so many different things. Kafka, S3, Athena, Glue... I'm like, I'm more of a "I got a database, here's the table, this is where it goes, I can query it, I can index it properly" kind of person.

For a team of 20+ people, spending months building a data lake means not building product features. The opportunity cost is too high. And honestly, I'm not sure we have the expertise in-house to do it right.

So what happened to the data for those two years?

Alexander: It just... disappeared. We had NGINX logs showing that requests hit the server, but we didn't have the parameters, what was the response, all these other things we needed to debug and figure out what the hell's going on with the client.

People would ask us all the time: "What's the accept rate for the ping? Like, how often do you reject? How often do you accept?" No way to answer that with Datadog. We simply weren't collecting the data because there was nowhere to put it.

That must have been frustrating for your customers.

Alexander: Extremely. Call centers are making million-dollar decisions about which vendors to work with, and they had zero data. They couldn't see which vendors deliver quality leads versus which ones are abusing the system with ping loops - where a seller will sell to another seller, and we'll get pinged multiple times for the same lead.

One example I discovered recently: there's a vendor who pings us five times with five different phone numbers at the same second. Why? Because they're trying to mask the phone number because they think we collect it. We keep our clients honest by redacting everything except the area code, but we didn't even know this pattern existed until we could analyze the logs.

Why TLDCRM Chose Firebolt

Walk us through how you found Firebolt.

Alexander: The number one question was: where do I put my logs? I've been asking this question for over two years to different people. I need something that can ingest high-volume logs but also query them as if they were in a database. And critically, I need my clients to be able to see these logs. That's the requirement that broke everything else.

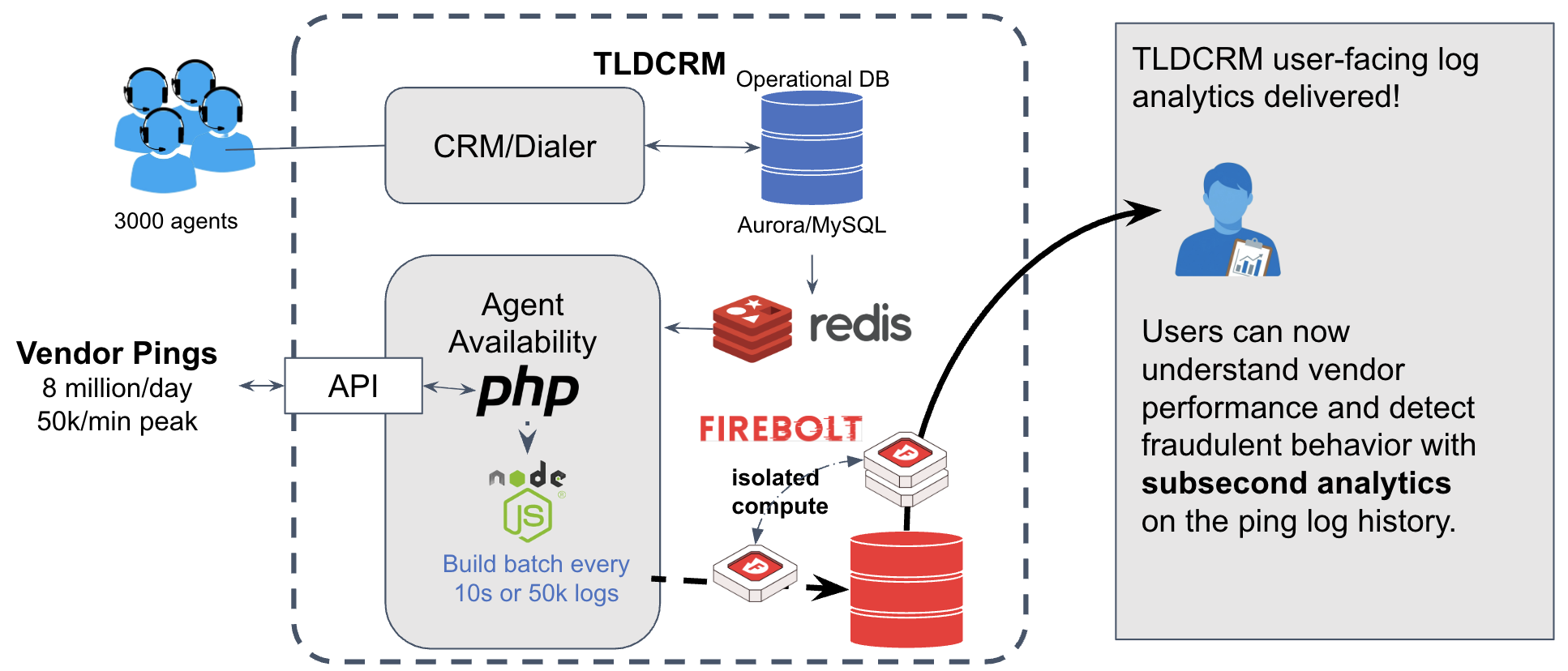

When I looked at Firebolt, a few things stood out. First, it's SQL. I'd prefer SQL because my ORM is SQL-based, so I was able to easily format Firebolt into that.

Second, the way Firebolt handles indexes is a lot smoother than Aurora. Your indexes aren't like Postgres indexes - they're your own thing because you're using the tablets concept. A columnar database where it's a lot easier to search and run aggregations.

Third, Firebolt does really well with bulk inserts. If I'm doing 50,000 logs a minute, it doesn’t make sense to individually insert them and create inefficient fragmentation. So we built a dispatch system that collects the data, aggregates it, then pushes it out every 10 seconds. Firebolt handles those bulk inserts beautifully.
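The difference between row-by-row and bulk inserts is easiest to see in the statement itself. The following TypeScript sketch builds one multi-row `INSERT` from a batch of rows. It is our illustration, not TLDCRM's dispatcher: `sqlLiteral` and `buildBulkInsert` are hypothetical helpers, and the quote-doubling escape is deliberately simplistic, so real code should rely on the database driver's parameter binding instead.

```typescript
type SqlValue = string | number | boolean | null;

// Render one value as a SQL literal. Simplistic on purpose: single quotes are
// doubled and nothing else is escaped, so prefer driver-level parameter binding.
function sqlLiteral(v: SqlValue): string {
  if (v === null) return "NULL";
  if (typeof v === "number") {
    if (!isFinite(v)) throw new Error("non-finite number cannot be inserted");
    return String(v);
  }
  if (typeof v === "boolean") return v ? "TRUE" : "FALSE";
  return "'" + v.replace(/'/g, "''") + "'";
}

// Build a single INSERT statement carrying every row in the batch.
function buildBulkInsert(table: string, columns: string[], rows: SqlValue[][]): string {
  if (rows.length === 0) throw new Error("no rows to insert");
  const tuples = rows.map((row) => {
    if (row.length !== columns.length) throw new Error("row width does not match column list");
    return "(" + row.map(sqlLiteral).join(", ") + ")";
  });
  return "INSERT INTO " + table + " (" + columns.join(", ") + ") VALUES\n" + tuples.join(",\n") + ";";
}
```

One statement with thousands of tuples means one round trip and one write on the database side, rather than thousands of tiny ones.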

Learn more about Firebolt indexing and pruning mechanisms.

Tell us about that architecture. How does data flow?

Alexander: Our core application is PHP, but we wrote a Node.js connector between PHP and Firebolt because we needed that batch aggregation layer.

Here's the flow: The PHP application handling ping requests fires a stream event via Redis (avoiding TLS renegotiation) to a Node.js dispatcher service. It's non-blocking, so the ping response time stays under 0.02 milliseconds - the PHP app doesn't wait for log confirmation.

The Node.js dispatcher collects logs in memory, batching them every 10 seconds. When the 10-second timer hits, it does a single bulk INSERT to Firebolt. We could probably even push it to one second, but 10-second lag is fine for analytics.

If the buffer fills up before 10 seconds, which can happen during traffic spikes, we have an emergency flush at 50,000 rows. And if Firebolt is temporarily unavailable for some reason, we back up the batch to S3 for recovery later.

The log ingestion path uses one Firebolt engine and the customer portal driving analytic queries uses a different one. Each engine is configured for the workload that it serves. So ingestion never interferes with query performance.
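The batching behavior Alexander describes (a 10-second timer, an emergency flush at 50,000 rows, and a backup path when the insert fails) can be sketched roughly as follows. This is a simplified TypeScript illustration under our own assumptions, not the actual dispatcher: `BatchDispatcher`, `insertBatch`, and `backupBatch` are hypothetical names, with the last two standing in for the Firebolt bulk insert and the S3 backup.

```typescript
type Row = Record<string, unknown>;

interface DispatcherOptions {
  flushIntervalMs: number;                      // 10 seconds in the interview
  maxBufferedRows: number;                      // 50,000 in the interview
  insertBatch: (rows: Row[]) => Promise<void>;  // hypothetical: one bulk INSERT into Firebolt
  backupBatch: (rows: Row[]) => Promise<void>;  // hypothetical: write the batch to S3
}

class BatchDispatcher {
  private opts: DispatcherOptions;
  private buffer: Row[] = [];
  private timer: ReturnType<typeof setInterval> | null = null;

  constructor(opts: DispatcherOptions) {
    this.opts = opts;
  }

  // Start the periodic flush.
  start(): void {
    if (this.timer === null) {
      this.timer = setInterval(() => { void this.flush(); }, this.opts.flushIntervalMs);
    }
  }

  stop(): void {
    if (this.timer !== null) {
      clearInterval(this.timer);
      this.timer = null;
    }
  }

  // Called once per log event; it never waits for the database.
  add(row: Row): void {
    this.buffer.push(row);
    // Emergency flush when a traffic spike fills the buffer before the timer fires.
    if (this.buffer.length >= this.opts.maxBufferedRows) void this.flush();
  }

  async flush(): Promise<void> {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = []; // swap first so new rows keep accumulating during the insert
    try {
      await this.opts.insertBatch(batch);
    } catch {
      // Insert failed: keep the batch somewhere durable for later recovery.
      await this.opts.backupBatch(batch);
    }
  }
}
```

In use, the service would construct one dispatcher with `flushIntervalMs: 10000` and `maxBufferedRows: 50000`, call `start()` once, and call `add()` for every event received from Redis. A production version would also need to handle a failing backup and flush on shutdown, which this sketch leaves out.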

Learn more about Firebolt engines and workload isolation.

What does the Firebolt schema look like?

Alexander: Pretty straightforward:

    CREATE TABLE vendor_ping_logs (
        timestamp        TIMESTAMP NOT NULL,
        account_id       BIGINT NOT NULL,
        vendor_id        VARCHAR(100) NOT NULL,
        vendor_name      VARCHAR(255),
        phone_area_code  CHAR(3),
        agent_available  BOOLEAN NOT NULL,
        accept_reject    VARCHAR(20) NOT NULL,
        reject_reason    VARCHAR(100),
        response_code    INT,
        response_time_ms DOUBLE PRECISION,
        full_payload     TEXT,
        created_date     DATE NOT NULL
    )
    PRIMARY INDEX account_id, created_date, timestamp;

The PRIMARY INDEX is built around tenant isolation - account_id comes first, so a query that filters on a customer only has to scan that customer's data. Then created_date for time-range queries, then timestamp for ordering within the day.

We also use aggregating secondary indexes optimized for common query patterns: vendor performance analysis, geographic filtering by area code, time-series queries. In MySQL, adding indexes slows down inserts. With Firebolt's tablet storage model and workload isolation, it's not a problem.

What can your customers actually do with this data now?

Alexander: They can access every single log. They can search through them. They have queries, filters. They can add or remove columns. They can click on view and look at the whole payload to see what happened.

The most common query is vendor performance analysis. Which vendors have high accept rates versus low? How many times per day is each vendor pinging us? What's the average response time?

    SELECT
        vendor_id,
        vendor_name,
        COUNT(*) AS total_pings,
        ROUND(100.0 * SUM(CASE WHEN accept_reject = 'accept'
                               THEN 1 ELSE 0 END) / COUNT(*), 2) AS accept_rate_pct,
        AVG(response_time_ms) AS avg_response_time
    FROM vendor_ping_logs
    WHERE account_id = :account_id
      AND created_date >= CURRENT_DATE - INTERVAL '30 days'
    GROUP BY vendor_id, vendor_name
    ORDER BY accept_rate_pct DESC;

This query was literally impossible before. Now it runs in under a second on 30 days of data.

We also built an abuse detection query that looks for suspicious patterns - vendors pinging too frequently, cycling through area codes, rapid-fire pings in the same second. That's how we discovered the vendor pinging us five times simultaneously with different numbers.
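The interview doesn't show the abuse detection query, but the "rapid-fire pings in the same second" pattern can be illustrated with a small TypeScript sketch over in-memory rows. Everything here is an assumption made for illustration: the `Ping` and `Burst` shapes, the `findBursts` function, and the threshold the caller passes in.

```typescript
interface Ping {
  vendorId: string;
  timestampMs: number; // epoch milliseconds
  areaCode: string;
}

interface Burst {
  vendorId: string;
  second: number;            // epoch seconds
  pings: number;             // pings from this vendor within that second
  distinctAreaCodes: number; // how many different area codes they carried
}

// Group pings by (vendor, second) and report groups at or above the threshold.
function findBursts(pings: Ping[], minPings: number): Burst[] {
  const groups = new Map<string, { vendorId: string; second: number; pings: number; areaCodes: Set<string> }>();
  for (const p of pings) {
    const second = Math.floor(p.timestampMs / 1000);
    const key = p.vendorId + "|" + second;
    let group = groups.get(key);
    if (!group) {
      group = { vendorId: p.vendorId, second: second, pings: 0, areaCodes: new Set<string>() };
      groups.set(key, group);
    }
    group.pings += 1;
    group.areaCodes.add(p.areaCode);
  }
  const bursts: Burst[] = [];
  groups.forEach((g) => {
    if (g.pings >= minPings) {
      bursts.push({ vendorId: g.vendorId, second: g.second, pings: g.pings, distinctAreaCodes: g.areaCodes.size });
    }
  });
  return bursts;
}
```

In SQL the same idea is a `GROUP BY` on vendor and truncated timestamp with a `HAVING COUNT(*)` filter. A call such as `findBursts(pings, 5)` would flag the five-pings-in-one-second vendor from Alexander's example.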

How do customers access these queries?

Alexander: Through their CRM portal. We built a query interface where they can filter by date range, vendor, status, area code. They can export to CSV. They can run unlimited queries - no rate limits like we would have had with Datadog.

The queries go from their browser, through our PHP backend, to Firebolt. We enforce tenant isolation by always filtering on account_id. Security is built in at the database level.
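One common way to guarantee that every query is filtered on account_id is to have the backend build the `WHERE` clause itself and take the account from the authenticated session, never from the request. The TypeScript sketch below illustrates that idea. It is not TLDCRM's PHP code: `Session`, `PingFilter`, and `buildPingQuery` are hypothetical, and the `:name` placeholders assume a driver that supports named parameters.

```typescript
interface Session {
  accountId: number; // set by the backend after authentication, never by the browser
}

interface PingFilter {
  from: string;      // inclusive date, e.g. "2025-01-01"
  to: string;        // inclusive date
  vendorId?: string;
  areaCode?: string;
}

interface ParamQuery {
  sql: string;
  params: Record<string, string | number>;
}

// Every query starts from the tenant filter; user-supplied filters can only narrow it.
function buildPingQuery(session: Session, filter: PingFilter): ParamQuery {
  const where = ["account_id = :account_id", "created_date >= :from", "created_date <= :to"];
  const params: Record<string, string | number> = {
    account_id: session.accountId,
    from: filter.from,
    to: filter.to,
  };
  if (filter.vendorId !== undefined) {
    where.push("vendor_id = :vendor_id");
    params["vendor_id"] = filter.vendorId;
  }
  if (filter.areaCode !== undefined) {
    where.push("phone_area_code = :area_code");
    params["area_code"] = filter.areaCode;
  }
  const sql =
    "SELECT * FROM vendor_ping_logs WHERE " + where.join(" AND ") +
    " ORDER BY timestamp DESC LIMIT 1000";
  return { sql: sql, params: params };
}
```

Leading with account_id and a date range also matches the table's primary index order, which is what keeps these customer-facing queries fast.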

What about performance? You mentioned queries run in under a second?

Alexander: Yeah, most queries are 200 milliseconds to 2 seconds depending on complexity. We're querying billions of rows at this point - 8 million per day adds up quickly - but Firebolt's query planner and aggregating indexes make queries extremely fast.

And this is with hundreds of users potentially querying simultaneously. No slowdowns. No timeouts. It just works.

Firebolt has proven that it's extremely fast when it comes to aggregations, which has been great for us.

Learn more about Firebolt Aggregating indexes.

Did you have to do a lot of query optimization?

Alexander: Not really. The columnar storage and indexing just work. As long as queries include account_id and a date range, they're fast. We don't have to think about it.

That's another thing I liked about Firebolt in general. The fact that you can have engines turn on and off - you're very cost conscious. If you're not using it, turn it off. Vacuuming the tablets to reduce data storage size. You guys actually care about the data that's going in.

With Datadog, they're just like "throw in whatever." No structure, no preference. I was sending multi-line logs and ingesting five, six, 10, 20 lines every time. If you had told me a year ago, "send logs as a single JSON object with standardized information that you can then use as facets to filter," it would have been nice.

Firebolt Benefits

What changed after implementing this?

Alexander: [pauses] Peace of mind. That's the biggest thing that Firebolt lets me do. I have peace of mind that I have a path forward for high volume data.

Before, I didn't have a path forward. There were so many options, but not as clean as what I was trying to do with my specific requirements. Now I can build more stuff faster, knowing that I have Firebolt in my toolkit.

We're integrating with an AI recording company. They take call recordings, process them, turn them into transcripts, and send the data back. I'm thinking about storing that data in Firebolt because it's going to be infrequently accessed, but the aggregations are necessary, particularly when you're dealing with calculated scoring.

Tell us more about that. What else are you planning?

Alexander: So the AI transcription thing is one use case. Another is recording metadata for clients in maintenance mode.

When a client offboards or goes into maintenance mode - meaning they're no longer active but want to keep access to their data - our biggest challenge is that we tear the dialer down to save money. But then we don't have access to the recording log for those dialers anymore.

I'm looking into an ETL process where I can send the recording log to Firebolt. I can do the bulk inserts very quickly and just have it go into a Firebolt table. Then when they're in maintenance mode, reference Firebolt for their recordings instead of referencing the original dialer.

We're also expanding to all our other API logs. Right now we're only using Firebolt for the vendor ping logs, but we have outbound API logs where we're sending third-party requests - we need to log those too.

All my ETL processes will likely go through Firebolt in some way or fashion eventually. At least I know Firebolt can handle it and it's not going to affect production. That's the other big thing - I don't want it to affect prod, which is the main system. The CRM needs to be a relational database, and classic MySQL can work just fine for that. But the log writing is high volume.

You mentioned this gives you a competitive advantage?

Alexander: Absolutely. We compete with standalone dialers that don't have CRMs, and we compete with Salesforce plus Five9, which requires buying two systems and hiring consultants to integrate them.

But now we also compete on transparency. Our customers can see exactly which vendors deliver quality versus which ones are abusing the system. They can detect fraud patterns. They can optimize operations with data that competitors simply can't provide.

That's not something you can copy by looking at a UI. That requires solving the same infrastructure problems we just solved. And that takes time.

What was the experience like working with the Firebolt team?

Alexander: I really liked the onboarding process with Firebolt and the customer service you guys give. Datadog was absolutely abysmal. They sold us on features that didn't work the way we needed.

With Ilan and Connor - your team - they've been amazing. I know I throw really complex technical questions at them because I really think things through. But you've been great. I mean, I told them the other day, if I didn't own the company and I wanted to get a new job, I would go ask Firebolt, "Can I work with you guys?" [laughs] It would be fun and interesting.

When you're doing a complex integration like this, responsive technical support isn't a nice-to-have. It's the difference between success and failure.

Any advice for other SaaS companies facing similar problems?

Alexander: If you're asking "where do I put my logs?" and you've been asking for more than a few months, you're probably stuck in the same traps I was.

Production transactional databases can't handle high-volume writes. Observability tools aren't designed for customer access. Data lakes are too complex for small teams.

You need something purpose-built for high-volume writes, customer-facing queries, SQL interface, predictable costs. That's what Firebolt gave us.

The other thing is, don't let perfect be the enemy of good. We're using 10-second batch windows. That's not real-time, but for our analytics use case, it's perfectly fine. The system didn't have to be perfect - it just had to exist, which it didn't for two years.

Last question: Was it worth the two-year wait?

Alexander: [laughs] I wish we'd found this solution two years ago. But yeah, it was worth it because now we have it right. We're not going to have to rebuild this in six months.

The logs are flowing. The customers are happy. I can sleep at night knowing that when we launch a new feature that generates data, I know where to put it.

That peace of mind? That's everything.

Firebolt: Thank you, Alexander. At Firebolt, we’ve built and continue to build the analytic database for engineers to help them tackle complex data problems like yours, and it’s great to see innovative leaders like yourself embracing it as the right tool for the job.

Additional Resources:

- Explore Firebolt's documentation.

- Start your free trial.

About TLDCRM: Total Lead Domination (TLDCRM) is a cloud-based SaaS CRM and VoIP dialer platform built specifically for health, life, and ancillary insurance call centers. Founded in 2013, TLDCRM serves 330+ insurance agencies and call centers with a unified platform that combines CRM, predictive dialer, policy management, and commission tracking. Learn more at tldcrm.com.

About Firebolt: Traditional warehouses and lakehouses force you to choose between performance, cost, and simplicity. Firebolt delivers all three on a single analytical database platform with the best price-performance in the market so you can ship faster without compromises.

We're built for companies that need their data platforms to do more: run AI workloads, power sub-second customer-facing analytics at scale, or execute ELT jobs faster at a fraction of the cost. Learn more.