Listen to this article

TL;DR

Firebolt supports explicit, multi-statement transactions using the familiar BEGIN, COMMIT, and ROLLBACK syntax. You can now group DML, DDL, and DCL statements into a single, atomic operation that maintains full ACID compliance while preserving the value of Firebolt's stateless architecture.

These transactions can span different compute clusters, provide snapshot isolation, and continue running even during compute cluster replacements or upgrades, with no downtime. This article explains the architectural decisions that make this possible, focusing on how Firebolt's centralized metadata service enables this capability

Syntax:

BEGIN TRANSACTION;

-- Read data to inform next steps

-- SELECT ...

-- Perform data modifications (DML)

-- INSERT, UPDATE, DELETE, MERGE

-- TRUNCATE, DROP PARTITION

-- Modify the database schema (DDL)

-- CREATE / ALTER / DROP TABLE/DATABASE

-- Manage permissions (DCL)

-- GRANT / REVOKE ...

COMMIT TRANSACTION;Why You Need Multi-Statement Transactions

Grouping multiple INSERT, UPDATE, and DELETE statements into a single atomic unit is fundamental to data integrity. Without this capability, partial failures can leave your data in an inconsistent state.

Consider a common ETL/ELT pattern where you update a fact table and its related dimension tables simultaneously. All updates must succeed or fail together to maintain consistency across your data model.

An even more demanding scenario is blue-green deployment: replacing a production database or table with a new version from staging. This involves a sequence of DROP and ALTER RENAME TO commands that must execute atomically. Partial success could leave your production environment broken.

In production-critical data analytics, atomicity isn't optional, it's essential. The challenge is providing these guarantees within a stateless, distributed architecture.

Decoupling transactions from compute clusters

Traditional data platforms often tie ACID transactions to a single, stateful compute node. While familiar, this approach creates rigidity that can't handle modern, distributed workloads. You sacrifice elasticity and resilience because a single point of failure can disrupt your entire workflow.

Firebolt decouples transactions from the compute layer. Transaction state is managed centrally by the metadata service, allowing transactions to be long-running and resilient to infrastructure changes.

A single transaction can seamlessly span compute cluster replacements, scaling events, or online upgrades without interruption or downtime. You can perform complex, multi-step transactions without worrying about the underlying compute cluster infrastructure.

This is a unique capability in cloud data platforms, providing integrity and control that works with, rather than against, a stateless, cloud-native architecture.

Why Firebolt uses centralized metadata architecture

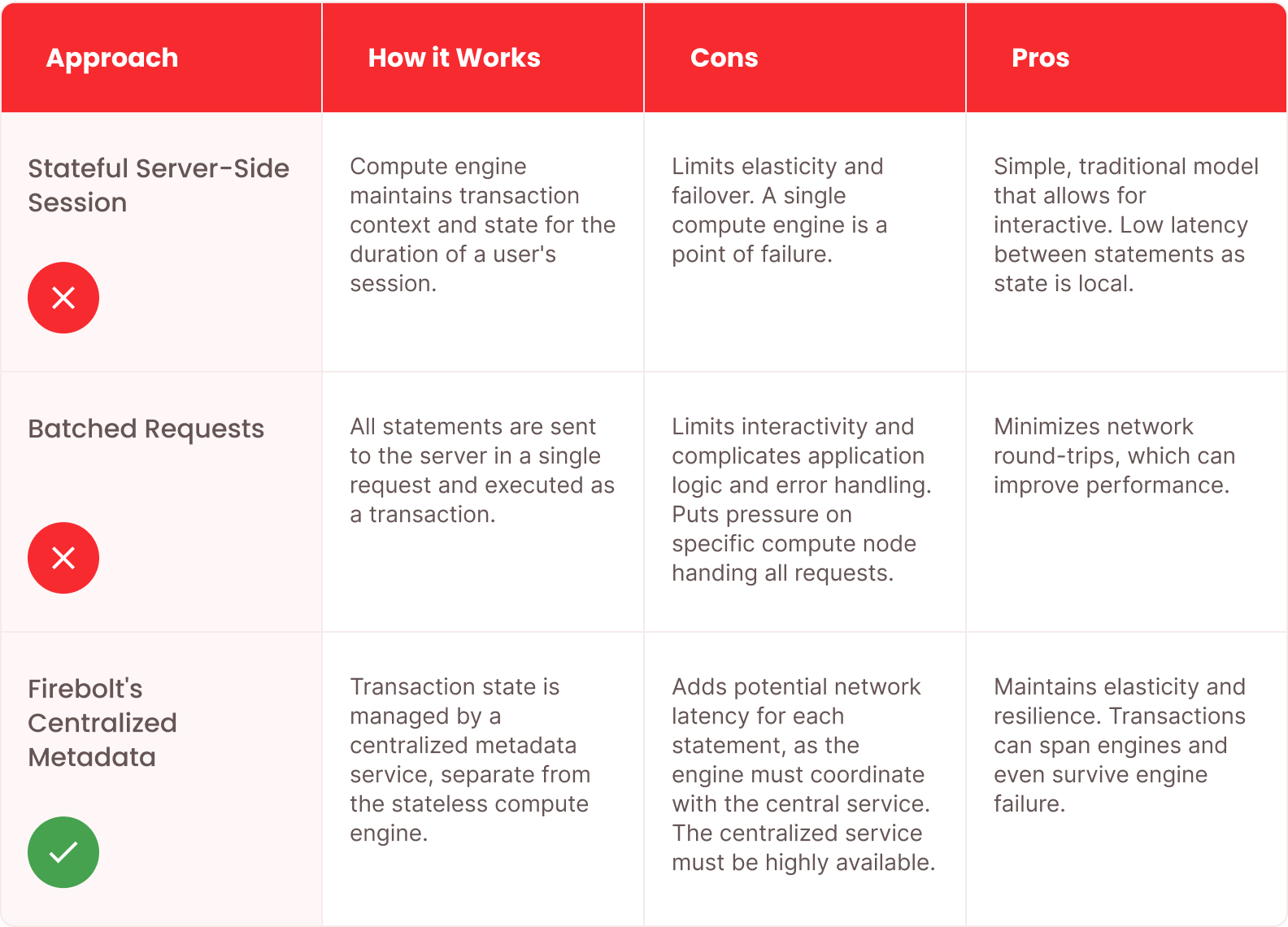

This table compares different approaches to transaction management in distributed systems:

Stateless execution with metadata-coordinated transactions

Firebolt's approach to explicit transactions leverages a centralized metadata service that is decoupled from compute clusters. This service acts as the single source of truth for all transaction states and content.

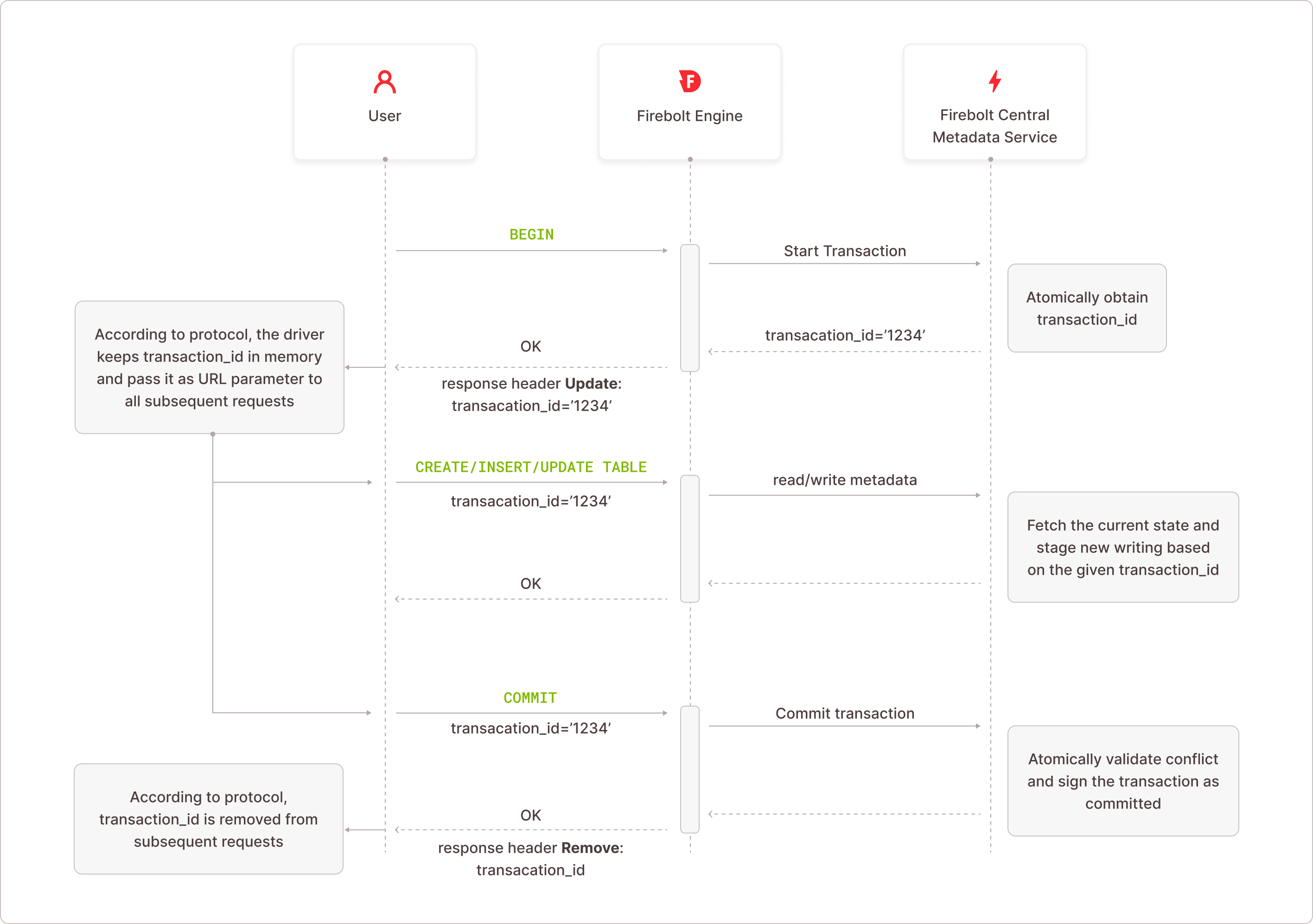

When you issue a BEGIN command, the metadata service provides a unique transaction ID. This ID serves as the identifier for an isolated workspace in the metadata service where all metadata changes, followed by INSERT or UPDATE operations, are staged.

These changes are initially available only to your session using that specific transaction ID. Once you commit the transaction, the metadata service atomically validates and marks the transaction as complete, making the staged data visible to all future requests.

This design ensures that compute clusters remain stateless and interchangeable, allowing transactions to maintain full ACID compliance even as they span different compuete clusters and resources. You never need sticky sessions or compute cluster affinity.

Metadata service architecture and design

The metadata service stores all system information that isn't user data. This includes table and database structure definitions, access control permissions, and other system-level information. Your actual data is stored separately in a data lake. The metadata service only stores metadata pointers called "tablets" that serve as a map to where your data resides.

The metadata service is built on FoundationDB, a distributed, transactional key-value store renowned for its high performance, strong consistency, and ability to handle large-scale distributed workloads with millisecond latencies.

This service maintains all metadata transactionally in the order it was committed, allowing Firebolt to construct a complete picture of the database state at any given point. To ensure strict, chronological ordering between transactions, Firebolt uses a Log Sequence Number (LSN) that acts as a global, monotonically increasing timestamp for the entire database.

Understanding the Log Sequence Number (LSN)

Think of the LSN as a precise timestamp that marks when each transaction begins and commits. It ensures transactions execute in a strict, predictable order.

Firebolt generates this LSN using FoundationDB's Versionstamp feature, which embeds the unique transaction's commit version into a key or value. This produces a textually sortable 20-hex-character LSN (e.g., 00000000f03ac8b10000) used extensively for transaction identification and for storing cache entries on the database compute cluster.

When a transaction begins, it receives an LSN (called begin_lsn) that defines the "snapshot" of the database the transaction will see. This ensures your transaction starts with the most up-to-date data, including all data from transactions that committed before it.

When the transaction commits, it receives a new LSN (called commit_lsn), which is guaranteed to be greater than any previous LSN. This commit_lsn marks the point at which changes become visible to other transactions.

This process guarantees that commit_lsn > begin_lsn for any given transaction. More importantly, the begin_lsn of the next transaction to start will be greater than the commit_lsn of the previous one. This strict, chronological ordering prevents transactions from seeing or interfering with each other's in-progress changes, maintaining the isolation and consistency of the database.

Building the transaction log

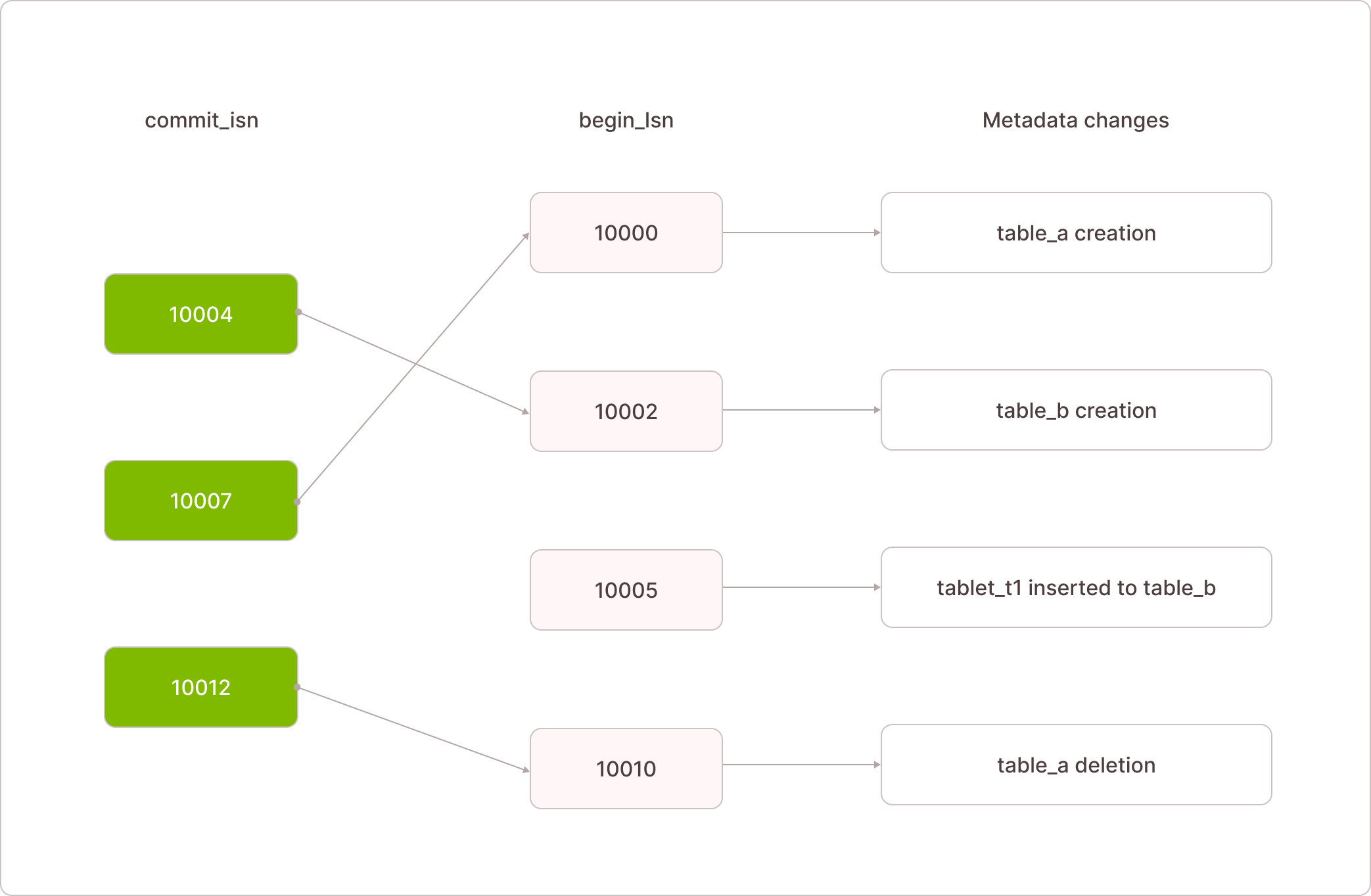

To ensure atomicity and isolation, Firebolt creates a dedicated transactional workspace for every transaction, whether explicit or implicit. All changes, from a single row update to a new table creation, are logged to a Write-Ahead Log (WAL) identified by the unique begin_lsn.

This log-based approach ensures data durability and acts as the building block for the entire transactional system. Changes written in a transaction are only accessible via the unique begin_lsn, guaranteeing they remain completely isolated from other transactions until they are officially committed.

The commit process makes these changes visible to the rest of the system. Instead of rewriting or moving the data, the transaction simply receives a new, unique commit_lsn. This commit_lsn serves as a pointer to the begin_lsn, making the changes permanently visible and part of the official transaction history.

This allows Firebolt to replay the transaction log in the correct commit order to reconstruct a snapshot of the database at any given point in time across all compute clusters.

To read the transaction log in the correct order, Firebolt first reads the list of ordered commit_lsn values. Each commit_lsn acts as a redirection to the corresponding begin_lsn that holds the data, allowing the system to accumulate the content needed to construct a consistent snapshot.

Key concepts:

- The begin_lsn defines a transaction's consistent snapshot, and its order reflects when transactions started.

- The commit_lsn is a globally ordered and sequential number that defines the final, true order of transactions, used to replay the transaction log and reconstruct a consistent system state.

Example: A transaction with begin_lsn 10010 can see and delete a table because it began after the table's creation was committed at commit_lsn 10070.

Another example: A transaction with begin_lsn 10005 can insert data into a table because it started after the table was committed. However, if that transaction is not committed, its data remains invisible to others.

Indexing WAL for Optimized Read Patterns

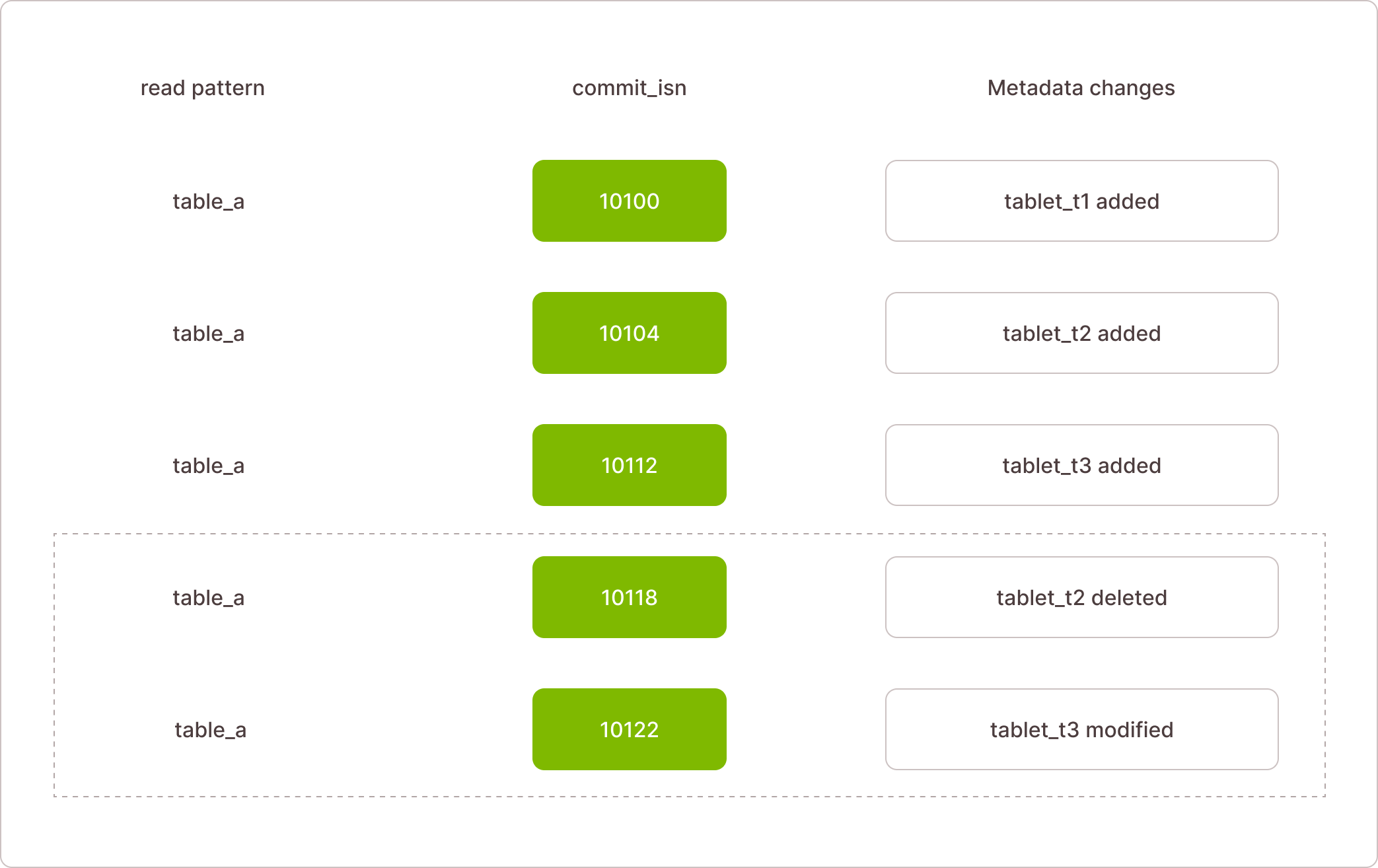

To avoid scanning the entire transaction log for every query, Firebolt maintains a crucial indexing layer on top of the WAL. These indexes quickly differentiate which committed changes are relevant to a given query, dramatically improving read performance.

The indexes are created by storing metadata changes, such as new or modified tablets and objects, in a separate, structured way. They are organized based on common access patterns (e.g., per-database or per-table) and sorted by their commit_lsn.

This approach provides two main benefits:

Single range read: The underlying key-value store is highly optimized for reading a range of ordered data instead of many small reads. By using these indexes, a query can access all relevant log entries in one operation. This avoids the inefficiency of many small, scattered reads and reduces I/O and latency.

Targeted access: The indexes enable reading only what you need, allowing a query to request only the portion of the log relevant for specific tablets or objects, within a specific time frame. This means you can read only the incremental changes from a recent snapshot the compute cluster already holds.

These indexes transform the WAL from a simple append-only log into a highly performant and queryable data structure.

WAL index structure:

The WAL index sorts data first by the read pattern (e.g., database for reading schema, table for reading table data), then by commit_lsn.

The index allows for a single, efficient read of a specific table, even within a given commit_lsn range. For example, if an compute cluster already knows the table's state up to commit_lsn 10112, it can request only the incremental changes in the range (10112, 10122] and apply those changes.

Compacting the Transaction Log into Snapshots

To manage the growth of the transaction log, Firebolt periodically compacts the recent transaction tail into a single, concise snapshot. This process creates a deterministic picture of the system at a given point in time, permanently applying all committed deletions and modifications and eliminating them from the log.

This compaction serves a crucial purpose: it allows Firebolt to safely remove old records from the transaction log and its indexes. After a snapshot is created and has passed a defined retention period, the log entries it replaced can be safely deleted.

This retention period enables features like time-travel, allowing you to reconstruct a system snapshot from a requested time in the past. The oldest snapshot you can use to reconstruct the system's state is determined by the retention period (also called the time horizon).

Since Firebolt regularly compacts older transaction logs into snapshots and then deletes the logs, you cannot construct a view of the system before this time horizon. This is why the time-travel feature is limited to the defined retention period.

A long-running explicit transaction is not a problem even if it runs for hours and tries to construct an "old" system state, because the data retention period is much larger than the transaction's lifetime.

Isolation levels and conflict handling

In a system with parallel transactions, a conflict occurs when two or more operations logically contradict each other, potentially leaving the database in an inconsistent state. To handle this, Firebolt employs Optimistic Concurrency Control (OCC).

This approach assumes conflicts are rare, allowing transactions to proceed without locks. Conflicts are detected during a final validation step before a transaction commits. The key difference between isolation levels is the specific types of conflicts they prevent using this OCC mechanism.

Isolation levels

Strict serializability: For critical metadata operations (e.g., creating or dropping a table), Firebolt applies strict serializability, the strongest isolation level. The OCC system checks for both read-write and write-write conflicts to ensure all structural changes are globally ordered.

For example, a transaction attempting an INSERT will fail if the target table was concurrently dropped, as this is a read-write conflict on the metadata.

Snapshot isolation: For data operations (e.g., SELECT, INSERT, UPDATE, DELETE), Firebolt uses snapshot isolation. A transaction is given a consistent view of the data from when it began, regardless of concurrent changes, which eliminates read-write conflicts.

Therefore, the OCC mechanism only needs to check for write-write conflicts, aborting the transaction only if it tries to modify data that another concurrent transaction has already committed.

How Firebolt enforces isolation

To enforce the specific isolation levels described above, Firebolt tracks every transaction's activity using a system of objects called dependency tags. These tags are the key to detecting conflicts during the commit phase.

Here's how it works:

Tracking during the transaction: Following an operation such as INSERT, when a transaction performs a WRITE or READ of metadata, the compute cluster creates a simple record, a dependency tag. This tag notes the type of operation (read or write) and the specific data involved.

Validation check: When a transaction is ready to commit, the metadata service examines all the tags created by other transactions that have committed since this transaction began.

Conflict detection: The metadata service then checks for conflicts. For example, to prevent a read-write conflict, it checks if any of the data this transaction READ has been written to by another committed transaction. If it finds a match, the transaction is immediately prevented from committing.

This process provides a flexible and precise way to enforce isolation. Firebolt simply configures the system to check for the specific types of conflicts required by each isolation level, preventing write-write conflicts for snapshot isolation and both read-write and write-write conflicts for strict serializability.

Example of read-write conflict validation

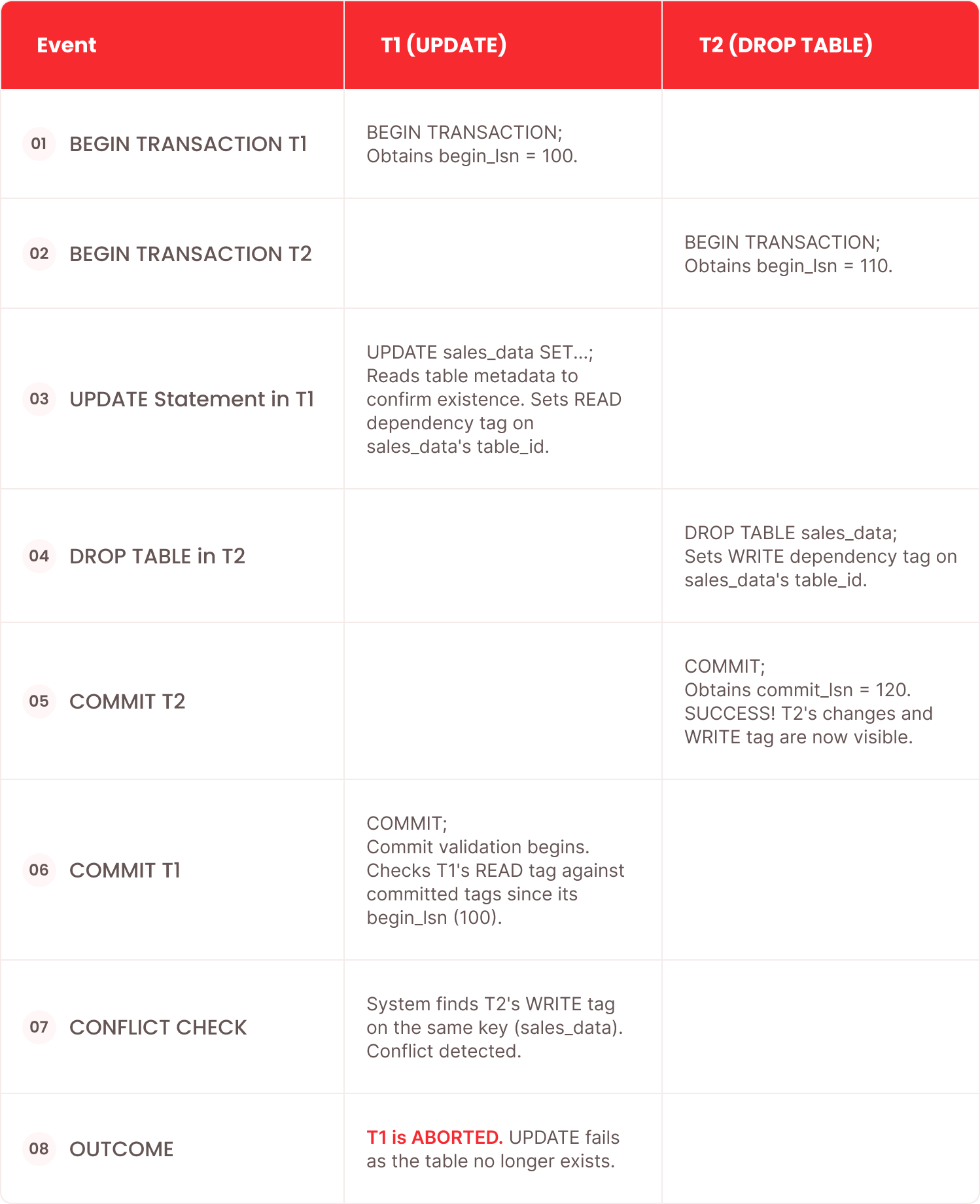

Consider two concurrent transactions, T1 and T2, interacting with a table named sales_data.

T1 begins an UPDATE operation on a row. It first reads the sales_data metadata to confirm the table's structure. The compute cluster registers a dependency tag for a READ on the sales_data metadata key.

T2 begins a DROP TABLE operation on sales_data, which is a structural change. The compute cluster registers a WRITE dependency tag on the sales_data metadata key.

T2 commits successfully. T2's WRITE dependency tag on the metadata key is recorded in the committed log.

T1 attempts to commit. The system checks T1's READ dependency tag against committed tags since T1 began. It finds a conflict with T2's WRITE tag on the same key.

T1 is aborted. Because T1's operation depended on a state that was concurrently changed, it cannot commit. This mechanism ensures the system's structural integrity by enforcing strict serializability for metadata operations.

Example:

In an explicit multi-statement transaction, you don't need to wait until COMMIT to realize there is a conflict. To improve your experience, Firebolt performs this validation after every statement rather than only at commit. This "fail-fast" behavior gives you earlier feedback on conflicts, saving time and resources.

Controlling Sequential Execution Within a Transaction

In a distributed system where any statement can be routed to any compute resource, ensuring sequential execution within a single transaction is crucial. A transaction depends on a consistent snapshot to operate, so allowing multiple write operations to run in parallel within the same isolated transactional space could introduce inconsistencies before the transaction is verified and committed.

For this reason, Firebolt explicitly restricts parallelism within a single transaction, rather than allowing undefined behavior like some other database systems.

Firebolt achieves this by generating a sequence_id along with the transaction_id. On every statement, the sequence_id is atomically checked and updated in the centralized metadata service and then sent back to the client.

The client, when submitting a subsequent statement in the same transaction, must attach the correct sequence_id in the HTTP headers. If the provided sequence_id does not match the expected value, the transaction is immediately aborted.

This mechanism guarantees that statements within a transaction execute sequentially, maintaining integrity even in a highly distributed environment.

Security Considerations

Security enforcement is real-time: Every statement in a transaction is individually authorized based on role permissions.

RBAC (Role-Based Access Control) is always checked against the most recent state of permissions, regardless of when the transaction began. This ensures that if your privileges are revoked mid-transaction, your next statement is immediately blocked. Access control is never stale.

Real-time Security Enforcement

The crucial point is that permission checks use the current, up-to-date state rather than the snapshot state at the beginning of the transaction. While data operations within a transaction see a consistent snapshot defined by the begin_lsn, security checks must always query the most up-to-date, globally committed state of the system's permissions.

This means that a separate read is performed on the permissions metadata for every statement to ensure up-to-date state. Even if a transaction has been running for a long time, its access is validated against the very latest security policies, preventing any staleness.

Atomic DCL Within Transactions

A powerful and unique feature of Firebolt's security model is its full support for Data Control Language (DCL) operations within transactions. You can create a new table and atomically GRANT permissions on it in the same transaction. This guarantees that security policies and schema changes are applied simultaneously, preventing any inconsistencies between access controls and database structure.

Just like other metadata changes (e.g., CREATE TABLE), DCL operations like GRANT and REVOKE are also written to the isolated transactional space. They are represented as specific objects in the transaction's WAL (Write-Ahead Log).

When the transaction successfully commits, both the new table and the corresponding GRANT are simultaneously made visible to the rest of the system using the commit_lsn. This prevents a race condition where a table is created and visible, but its intended permissions haven't been applied yet, ensuring the system is never in an inconsistent state.

Conclusion

Firebolt delivers robust multi-statement transactions without introducing a stateful compute layer. The centralized metadata service, powered by an append-only Write-Ahead Log, ensures data durability and consistency.

This architecture allows for the atomic execution of DML, DDL, and DCL operations within a single transaction, a capability that sets Firebolt apart from platforms like Snowflake and Databricks. As a result, you can:

- Run transactional statements across different compute clusters

- Replace compute nodes mid-transaction without losing state

- Atomically update both data and schema in a single transaction

This approach preserves the core benefits of Firebolt's cloud-native design that optimises elasticity, resilience, and statelessness, while delivering one of the most powerful and highly requested database features.