
Preamble

In modern cloud-native environments, ensuring that only trusted container images run in production is crucial for security, compliance, and reliability. But enforcing strict image verification often comes with challenges like high latency, unpredictable failures, and limited visibility, especially when relying on third-party tools. At Firebolt, we faced these exact challenges. This post walks you through our journey of building Auror, an open-source, high-performance, custom Kubernetes admission webhook that validates container images efficiently and transparently.

Until recently, we relied on a third-party tool to ensure that only signed images ran in our clusters, but it presented two key challenges: unpredictable latency, especially for cross-region Elastic Container Registry (ECR) calls, and a lack of visibility due to missing metrics. Because it was a third-party tool, we had little control over it.

To address these issues, we built a custom image admission controller that allows only images from our ECR with valid signatures to run in production. In this post, we dive into how we replaced the third-party image admission controller with our own solution, how our caching mechanism mitigated the high latency of cross-region calls and achieved sub-millisecond latency on cache hits, and how it let us expose custom metrics such as external images (those coming from outside our ECR) and cache hits and misses.

What Is a Kubernetes Admission Webhook?

In Kubernetes, an admission webhook is an HTTPS endpoint that the API Server contacts to determine what to do with objects when they are created or updated. A webhook is registered by creating an admission webhook configuration (via Kubernetes resources such as MutatingWebhookConfiguration and ValidatingWebhookConfiguration), which instructs Kubernetes on which API operations (e.g., CREATE, UPDATE) and which resources (e.g., Pods, Deployments) should trigger a request to the webhook.

One of the important fields to configure is failurePolicy. It specifies how to handle unrecognized errors or timeouts from the admission webhook. It can have two values: Ignore and Fail.

Ignore means that if the webhook call results in an error or timeout, the request is allowed to proceed. Fail means that in the case of an error or timeout, the request is denied. By default, failurePolicy is set to Fail.
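The fallback rule can be modeled in a few lines of Go. This is an illustrative model of the behavior described above, not Kubernetes source code; `FailurePolicy`, `admitted`, and `errWebhookTimeout` are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
)

// FailurePolicy mirrors the two values of a webhook's failurePolicy field.
type FailurePolicy string

const (
	Ignore FailurePolicy = "Ignore"
	Fail   FailurePolicy = "Fail"
)

// errWebhookTimeout stands in for any error or timeout while calling the webhook.
var errWebhookTimeout = errors.New("webhook call timed out")

// admitted models the API server's decision for a single webhook.
// callErr is non-nil when the call failed; allowed is the webhook's own
// verdict and only counts when the call succeeded.
func admitted(policy FailurePolicy, allowed bool, callErr error) bool {
	if policy == "" {
		policy = Fail // an unset failurePolicy defaults to Fail
	}
	if callErr != nil {
		// No usable answer from the webhook: fall back to failurePolicy.
		return policy == Ignore
	}
	return allowed
}

func main() {
	fmt.Println(admitted(Ignore, false, errWebhookTimeout)) // true: request proceeds
	fmt.Println(admitted(Fail, false, errWebhookTimeout))   // false: request rejected
	fmt.Println(admitted(Fail, false, nil))                 // false: webhook denied it
}
```

The policy only matters when the webhook gives no usable answer; an explicit approval or denial from the webhook is always honored.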

These requests are wrapped in an AdmissionReview, a standardized API object that Kubernetes uses to structure requests and responses between the API server and admission webhooks. The webhook receives them as POST requests. Depending on its type, the webhook can inspect or modify the object and then returns an AdmissionReview response that approves or denies it.
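As a rough sketch of that exchange, the Go types below mirror a small subset of the `admission.k8s.io/v1` AdmissionReview wire format, and `respond` builds a reply that echoes the request `uid`, which the API server requires. The Go type names and the 403 status code are illustrative choices; production webhooks typically use the types from `k8s.io/api/admission/v1` instead of hand-written structs.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Minimal subset of the admission.k8s.io/v1 AdmissionReview schema.
// JSON tags follow the wire format; the Go type names are our own.
type GroupVersionKind struct {
	Group   string `json:"group"`
	Version string `json:"version"`
	Kind    string `json:"kind"`
}

type AdmissionRequest struct {
	UID       string           `json:"uid"`
	Kind      GroupVersionKind `json:"kind"`
	Namespace string           `json:"namespace,omitempty"`
	Name      string           `json:"name,omitempty"`
	Operation string           `json:"operation"`
	Object    json.RawMessage  `json:"object,omitempty"`
}

type Status struct {
	Code    int32  `json:"code,omitempty"`
	Message string `json:"message,omitempty"`
}

type AdmissionResponse struct {
	UID     string  `json:"uid"`
	Allowed bool    `json:"allowed"`
	Status  *Status `json:"status,omitempty"`
}

type AdmissionReview struct {
	APIVersion string             `json:"apiVersion"`
	Kind       string             `json:"kind"`
	Request    *AdmissionRequest  `json:"request,omitempty"`
	Response   *AdmissionResponse `json:"response,omitempty"`
}

// respond builds the reply for the API server: same apiVersion and kind,
// the request UID echoed back, and a reason attached to denials.
func respond(in AdmissionReview, allowed bool, message string) AdmissionReview {
	resp := &AdmissionResponse{UID: in.Request.UID, Allowed: allowed}
	if !allowed {
		resp.Status = &Status{Code: 403, Message: message}
	}
	return AdmissionReview{APIVersion: in.APIVersion, Kind: in.Kind, Response: resp}
}

func main() {
	body := []byte(`{"apiVersion":"admission.k8s.io/v1","kind":"AdmissionReview",
	"request":{"uid":"705ab4f5-6393-11e8-b7cc-42010a800002",
	"kind":{"group":"apps","version":"v1","kind":"Deployment"},
	"namespace":"default","name":"web","operation":"CREATE"}}`)

	var review AdmissionReview
	if err := json.Unmarshal(body, &review); err != nil {
		panic(err)
	}
	out, err := json.Marshal(respond(review, false, "image is not signed"))
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```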

There are two main types of admission webhooks:

- ValidatingWebhook: Reads the object creation request and either approves or denies it. Ideal for checks like “Is this image signed?”

- MutatingWebhook: Can modify or add fields to the object creation request, such as labels or annotations, before denying or approving.
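For contrast with a purely validating webhook, a mutating webhook returns its changes as a JSON Patch (RFC 6902) in the `patch` field of its response, base64-encoded, with `patchType` set to `JSONPatch`. The sketch below assumes the target object already has a `metadata.labels` map; `addLabel`, `mutatingResponse`, and the label itself are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// patchOp is one RFC 6902 JSON Patch operation.
type patchOp struct {
	Op    string `json:"op"`
	Path  string `json:"path"`
	Value any    `json:"value,omitempty"`
}

// mutatingResponse is the response half of an admission.k8s.io/v1
// AdmissionReview. Patch is a []byte, so encoding/json emits it as a
// base64 string, which is the encoding the API server expects.
type mutatingResponse struct {
	UID       string `json:"uid"`
	Allowed   bool   `json:"allowed"`
	PatchType string `json:"patchType,omitempty"`
	Patch     []byte `json:"patch,omitempty"`
}

// addLabel approves the object and adds one label. Assumptions: the object
// already has metadata.labels, and key contains no "/" or "~" (those would
// need JSON Pointer escaping as "~1" and "~0").
func addLabel(uid, key, value string) (mutatingResponse, error) {
	ops := []patchOp{{Op: "add", Path: "/metadata/labels/" + key, Value: value}}
	patch, err := json.Marshal(ops)
	if err != nil {
		return mutatingResponse{}, err
	}
	return mutatingResponse{UID: uid, Allowed: true, PatchType: "JSONPatch", Patch: patch}, nil
}

func main() {
	resp, err := addLabel("abc-123", "team", "platform")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(resp.Patch))
	// [{"op":"add","path":"/metadata/labels/team","value":"platform"}]
}
```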

Why Our Own Image Admission Webhook?

While several existing solutions are available, developing an in-house alternative requires substantial time and resources, so you need solid reasons to abandon third-party tools in favor of a custom internal solution. We had ours:

Latency Spikes & Timeouts

With our third-party tool, we encountered timeouts, especially during cross-region ECR calls. Same-region calls often took hundreds of milliseconds, and cross-region calls under load could take up to 8 seconds or even time out. We had no way to tune the third-party tool to address these latency issues.

Lack of Metrics

Due to a lack of reported metrics, we couldn’t track how many images passed or failed validation, nor could we collect data on external images.

Desire for Full Control

We wanted to own the validation flow, integrate rich metrics, and optimize for our exact use cases. Because our main goal was to verify images based on their signatures, our solution could be more lightweight than a general-purpose tool.

Crafting Auror: A Kubernetes Admission Webhook

Let’s move beyond the abstract and jump into the technical details that made Auror thrive. The main purpose of Auror is to allow only signed images hosted in our ECR to run in our clusters.

Auror is implemented as a ValidatingWebhook. It performs two checks when an image admission request comes from the Kubernetes API Server:

- Registry check: Verifies that the image comes from our ECR.

- Signature check: Confirms the image contains a valid signature created with Cosign (an open-source container signing tool).
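The registry check can be sketched as a partition of image references by registry host. This sketch assumes internal references start with an ECR host of the form `<account>.dkr.ecr.<region>.amazonaws.com`; the account ID and region are placeholders, `splitImages` is a hypothetical helper, and the Cosign signature check is not shown.

```go
package main

import (
	"fmt"
	"strings"
)

// contains reports whether s is in list.
func contains(list []string, s string) bool {
	for _, v := range list {
		if v == s {
			return true
		}
	}
	return false
}

// splitImages partitions image references into internal images (hosted in
// one of the allowed registries) and external images (everything else).
// The registry host is the part before the first "/"; references without a
// "/" (e.g. "nginx:1.25") implicitly come from Docker Hub and are external.
func splitImages(images, allowedRegistries []string) (internal, external []string) {
	for _, img := range images {
		host, _, found := strings.Cut(img, "/")
		if found && contains(allowedRegistries, host) {
			internal = append(internal, img)
		} else {
			external = append(external, img)
		}
	}
	return internal, external
}

func main() {
	allowed := []string{"123456789012.dkr.ecr.us-east-1.amazonaws.com"} // placeholder ECR host
	internal, external := splitImages([]string{
		"123456789012.dkr.ecr.us-east-1.amazonaws.com/api:1.4.2",
		"nginx:1.25",
		"ghcr.io/example/tool:v2",
	}, allowed)
	fmt.Println("internal:", internal)
	fmt.Println("external:", external)
}
```

Matching the exact host, rather than a string prefix, keeps look-alike hosts such as `<ecr-host>.evil.example` on the external side.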

If either check fails, the request is denied; otherwise, it is approved. By checking for external images first, we avoid unnecessary ECR calls and reject those images directly, since we know they do not exist in our ECR. We also record them in our metrics so we can track them. Only non-external images that are not in our local cache trigger ECR calls.

```go
if len(externalImages) > 0 {
	// Record the external-image metric only for these top-level workload kinds.
	// The opening brace must stay on the same line as the if statement in Go.
	if kind := admissionReview.Request.Kind.Kind; kind == "Deployment" || kind == "StatefulSet" || kind == "DaemonSet" || kind == "CronJob" {
		metrics.RecordExternalImage(ctx,
			admissionReview.Request.Namespace,
			admissionReview.Request.Kind.Kind,
			admissionReview.Request.Name)
	}

	v.logger.Error("Found external images that will not be validated",
		"images", externalImages,
		"namespace", admissionReview.Request.Namespace,
		"name", admissionReview.Request.Name,
		"kind", admissionReview.Request.Kind.Kind)

	// Fail verification right away, without any ECR call.
	v.handleFailedVerification(w, &admissionReview, "", fmt.Sprintf("%v", externalImages),
		fmt.Errorf("Found external images that will not be validated"))
	return
}
```

To avoid redundant ECR lookups for already validated images, we adopted a three-tier in-memory cache built on Ristretto (an open-source Go cache library):

- Tag Cache: short-lived entries keyed by image tag. Because a tag can later point to a different image, these entries only speed up verification for a short period.

- Digest Cache: long-lived entries for digests whose signatures have been verified. This is the most reliable tier: a digest always identifies the same image content, so there is no risk of a matching tag hiding a different digest.

- Owner Cache: for objects that depend on an owner resource. In Kubernetes, some objects own others; for example, a Deployment owns its ReplicaSets. Each dependent object records its owner's UID in its metadata.ownerReferences[].uid field. Because we cache the UID of an owner once it has been validated, subsequent requests for its dependents, which carry that same UID, are validated directly from the cache. This effectively speeds up the validation process.
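A minimal sketch of how the three tiers could fit together, using a mutex-guarded map with per-tier TTLs as a stand-in for Ristretto (whose API is not used here). The TTL values, the rule that routes `@sha256:` references to the digest tier, and all names are assumptions for illustration, not Auror's code.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
	"time"
)

// ttlCache is a minimal stand-in for one Ristretto tier: keys expire after ttl.
type ttlCache struct {
	mu    sync.Mutex
	ttl   time.Duration
	items map[string]time.Time // key -> expiry time
}

func newTTLCache(ttl time.Duration) *ttlCache {
	return &ttlCache{ttl: ttl, items: make(map[string]time.Time)}
}

func (c *ttlCache) add(key string, now time.Time) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.items[key] = now.Add(c.ttl)
}

func (c *ttlCache) has(key string, now time.Time) bool {
	c.mu.Lock()
	defer c.mu.Unlock()
	exp, ok := c.items[key]
	if ok && !now.Before(exp) {
		delete(c.items, key) // lazily drop the expired entry
		return false
	}
	return ok
}

// tieredCache groups the three tiers. The TTLs are hypothetical.
type tieredCache struct{ tag, digest, owner *ttlCache }

func newTieredCache() *tieredCache {
	return &tieredCache{
		tag:    newTTLCache(5 * time.Minute), // tags can be re-pointed: keep briefly
		digest: newTTLCache(24 * time.Hour),  // digests are immutable: keep longer
		owner:  newTTLCache(time.Hour),
	}
}

// tierFor sends digest references (repo@sha256:...) to the digest tier
// and all other references to the tag tier.
func (t *tieredCache) tierFor(image string) *ttlCache {
	if strings.Contains(image, "@sha256:") {
		return t.digest
	}
	return t.tag
}

// remember records an object whose images passed the signature check,
// caching its own UID so that its dependents can hit the owner tier.
func (t *tieredCache) remember(objectUID string, images []string, now time.Time) {
	t.owner.add(objectUID, now)
	for _, img := range images {
		t.tierFor(img).add(img, now)
	}
}

// cached reports whether a request can be approved without calling ECR:
// an ownerReferences UID belongs to a validated owner, or every image hits.
func (t *tieredCache) cached(ownerRefUIDs, images []string, now time.Time) bool {
	for _, uid := range ownerRefUIDs {
		if t.owner.has(uid, now) {
			return true
		}
	}
	if len(images) == 0 {
		return false
	}
	for _, img := range images {
		if !t.tierFor(img).has(img, now) {
			return false // miss: fall back to an ECR lookup
		}
	}
	return true
}

var epoch = time.Unix(1_700_000_000, 0)

func main() {
	c := newTieredCache()
	img := "123456789012.dkr.ecr.us-east-1.amazonaws.com/api:1.4.2"
	c.remember("deploy-uid", []string{img}, epoch) // hypothetical Deployment UID
	// A ReplicaSet listing "deploy-uid" in metadata.ownerReferences hits the owner tier.
	fmt.Println(c.cached([]string{"deploy-uid"}, nil, epoch)) // true
	// Ten minutes later the short-lived tag entry has expired.
	fmt.Println(c.cached(nil, []string{img}, epoch.Add(10*time.Minute))) // false
}
```

Keeping tags and digests in separate tiers is what allows different lifetimes: a digest can never start pointing at different content, whereas a tag can.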

How to Be Sure We Do Not Break Things?

To ensure that our new Auror admission flow wouldn’t interfere with production workloads, we first had to test it. We followed this rollout plan:

1. After initial tests in our local kind cluster, we deployed Auror to our staging cluster without enabling it as a validating admission webhook. For testing, we used our internal CLI tool, Officer, to send mock admission review requests to the webhook and confirmed that it validated images correctly.

2. We then enabled the validating webhook in audit mode, which we set as an argument through the values.yaml file of Auror’s Helm chart. External images and images without a signature were caught and logged as warnings, while creation requests for resources containing a podSpec (e.g., Pods, Deployments) were still allowed.

```go
// Audit mode: allow the request, but log and return a warning.
if v.mode == "audit" {
	warningMessage := "WARNING: Allowing " + review.Request.Kind.Kind + " creation in audit mode: " + message
	v.logger.Info(warningMessage)
	v.sendResponse(w, review, true, warningMessage)
	return
}

// Deny mode: log the reason and reject the request.
v.logger.Info(message)
v.sendResponse(w, review, false, message)
```

3. After running Auror in audit mode in production for over two months, we reviewed the findings and worked with other teams to either sign their unsigned images or move external images into our ECR. With these issues addressed, we plan to switch from audit mode to deny mode, in which unsigned or externally hosted images will be strictly blocked.
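The audit/deny switch and an Officer-style mock request can be exercised together with `net/http/httptest`. In this sketch, `validator` and `sendMock` are hypothetical; putting the audit-mode message into the AdmissionReview `warnings` field is an assumption about where such a message could surface, not a description of Auror's `sendResponse`.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Trimmed admission.k8s.io/v1 AdmissionReview types (only the fields used here).
type kindRef struct {
	Kind string `json:"kind"`
}

type request struct {
	UID  string  `json:"uid"`
	Kind kindRef `json:"kind"`
}

type status struct {
	Message string `json:"message"`
}

type response struct {
	UID      string   `json:"uid"`
	Allowed  bool     `json:"allowed"`
	Warnings []string `json:"warnings,omitempty"`
	Status   *status  `json:"status,omitempty"`
}

type review struct {
	APIVersion string    `json:"apiVersion"`
	Kind       string    `json:"kind"`
	Request    *request  `json:"request,omitempty"`
	Response   *response `json:"response,omitempty"`
}

// validator is a hypothetical webhook handler; check reports whether the
// request's images passed and, if not, why.
type validator struct {
	mode  string // "audit" or "deny"
	check func(*request) (ok bool, reason string)
}

func (v *validator) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	var in review
	if err := json.NewDecoder(r.Body).Decode(&in); err != nil || in.Request == nil {
		http.Error(w, "malformed AdmissionReview", http.StatusBadRequest)
		return
	}
	resp := &response{UID: in.Request.UID, Allowed: true}
	if ok, reason := v.check(in.Request); !ok {
		if v.mode == "audit" {
			// Audit mode: admit the object but surface the finding as a warning.
			resp.Warnings = []string{"allowing " + in.Request.Kind.Kind + " in audit mode: " + reason}
		} else {
			// Deny mode: reject the object and return the reason.
			resp.Allowed = false
			resp.Status = &status{Message: reason}
		}
	}
	w.Header().Set("Content-Type", "application/json")
	_ = json.NewEncoder(w).Encode(review{APIVersion: in.APIVersion, Kind: in.Kind, Response: resp})
}

// sendMock plays the role of a CLI client posting a mock AdmissionReview.
func sendMock(h http.Handler, uid, kind string) response {
	body, _ := json.Marshal(review{APIVersion: "admission.k8s.io/v1", Kind: "AdmissionReview",
		Request: &request{UID: uid, Kind: kindRef{Kind: kind}}})
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodPost, "/validate", bytes.NewReader(body)))
	var out review
	if err := json.NewDecoder(rec.Body).Decode(&out); err != nil || out.Response == nil {
		panic(fmt.Sprintf("unexpected webhook reply: %v", err))
	}
	return *out.Response
}

func main() {
	unsigned := func(*request) (bool, string) { return false, "image is not signed" }
	a := sendMock(&validator{mode: "audit", check: unsigned}, "uid-1", "Deployment")
	fmt.Println(a.Allowed, a.Warnings) // true [allowing Deployment in audit mode: image is not signed]
	d := sendMock(&validator{mode: "deny", check: unsigned}, "uid-2", "Deployment")
	fmt.Println(d.Allowed, d.Status.Message) // false image is not signed
}
```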

Observability

Because it is our in-house solution, we log details about external, unsigned, and signed images. Our logging system picks up these logs, and we create alerts for the following scenarios:

- External images. We can review their CVEs, image scan results, and, where possible, source code, then migrate them to our ECR and sign them once validated.

- Unsigned images in our ECR. These alerts help us identify internal images that haven’t been signed, so we can sign them and let them run securely in our pods.

- Signature mismatches. These may indicate a CI/CD pipeline bug or a potential supply chain attack. This way we can intervene early and investigate the source.
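Alerts like these are easiest to build when each finding is logged as structured JSON. The Go standard library's `log/slog` accepts the same key-value call style as the `v.logger.Error(...)` call shown earlier; whether Auror actually uses slog is an assumption, and the `finding` values below are hypothetical names that an alert rule could match on.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"log/slog"
)

// logFinding writes one structured log line per validation finding.
// finding is a hypothetical category such as "external_image",
// "unsigned_image", or "signature_mismatch".
func logFinding(logger *slog.Logger, finding, image, namespace, name, kind string) {
	logger.Warn("image validation finding",
		"finding", finding,
		"image", image,
		"namespace", namespace,
		"name", name,
		"kind", kind)
}

// captureFinding logs into memory with a JSON handler and decodes the line,
// roughly what a log pipeline does before evaluating an alert rule.
func captureFinding(finding, image, namespace, name, kind string) (map[string]any, error) {
	var buf bytes.Buffer
	logFinding(slog.New(slog.NewJSONHandler(&buf, nil)), finding, image, namespace, name, kind)
	var entry map[string]any
	err := json.Unmarshal(buf.Bytes(), &entry)
	return entry, err
}

func main() {
	entry, err := captureFinding("external_image", "nginx:1.25", "test-1", "e2e-mock", "Deployment")
	if err != nil {
		panic(err)
	}
	fmt.Println(entry["level"], entry["finding"], entry["image"]) // WARN external_image nginx:1.25
}
```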

We also emit Prometheus metrics for external images, cache hit/miss ratios, and latency.
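A cache hit ratio is simply hits divided by total lookups. The sketch below counts both per tier with `sync/atomic` integers as a stand-in for Prometheus counters, so it runs without the Prometheus client library; `cacheStats` and `tierStats` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// cacheStats counts lookups for one cache tier. In production these would be
// Prometheus counters; atomic integers stand in for them here.
type cacheStats struct {
	hits, misses atomic.Int64
}

func (s *cacheStats) record(hit bool) {
	if hit {
		s.hits.Add(1)
	} else {
		s.misses.Add(1)
	}
}

// hitRatio returns hits / (hits + misses), or 0 before the first lookup.
func (s *cacheStats) hitRatio() float64 {
	h, m := s.hits.Load(), s.misses.Load()
	if h+m == 0 {
		return 0
	}
	return float64(h) / float64(h+m)
}

// tierStats keeps one counter pair per tier, as a cache_type label would.
var tierStats = map[string]*cacheStats{"tag": {}, "digest": {}, "owner": {}}

func main() {
	for _, hit := range []bool{true, true, true, false} {
		tierStats["digest"].record(hit)
	}
	fmt.Printf("digest hit ratio: %.2f\n", tierStats["digest"].hitRatio()) // digest hit ratio: 0.75
}
```

Exporting raw hit and miss counts, rather than a precomputed ratio, lets the dashboard compute the ratio over any time window.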

One of the exposed metrics, cache_entries, tracks the current number of items in each cache tier (digest, owner, tag). We use it to monitor cache utilization and adjust cache_size.

```text
# HELP cache_entries Number of entries in each cache
# TYPE cache_entries gauge
cache_entries{cache_type="digest"} 2
cache_entries{cache_type="owner"} 17
cache_entries{cache_type="tag"} 17
```

external_images_total represents the total number of admission requests for images coming from outside our ECR. In Grafana dashboards, we can track external images together with the namespace and name of the workload in which they were observed.

```text
# HELP external_images_total Total number of external images encountered
# TYPE external_images_total counter
external_images_total{kind="Deployment",name="e2e-mock",namespace="test-1"} 1
```

The third-party tool we relied on lacked the necessary Prometheus metrics, which prevented a meaningful performance comparison with our in-house solution. After addressing its core limitations, such as cross-region timeouts and high admission latency, by developing our own admission controller, we benchmarked it against Kyverno, the most widely used open-source admission validation webhook. Here is why we chose to go with our in-house solution:

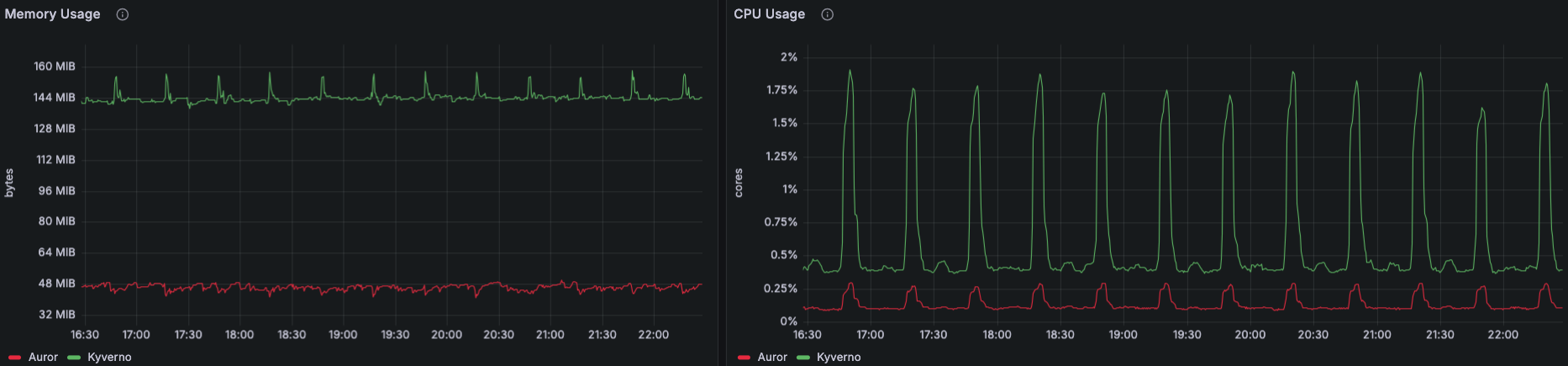

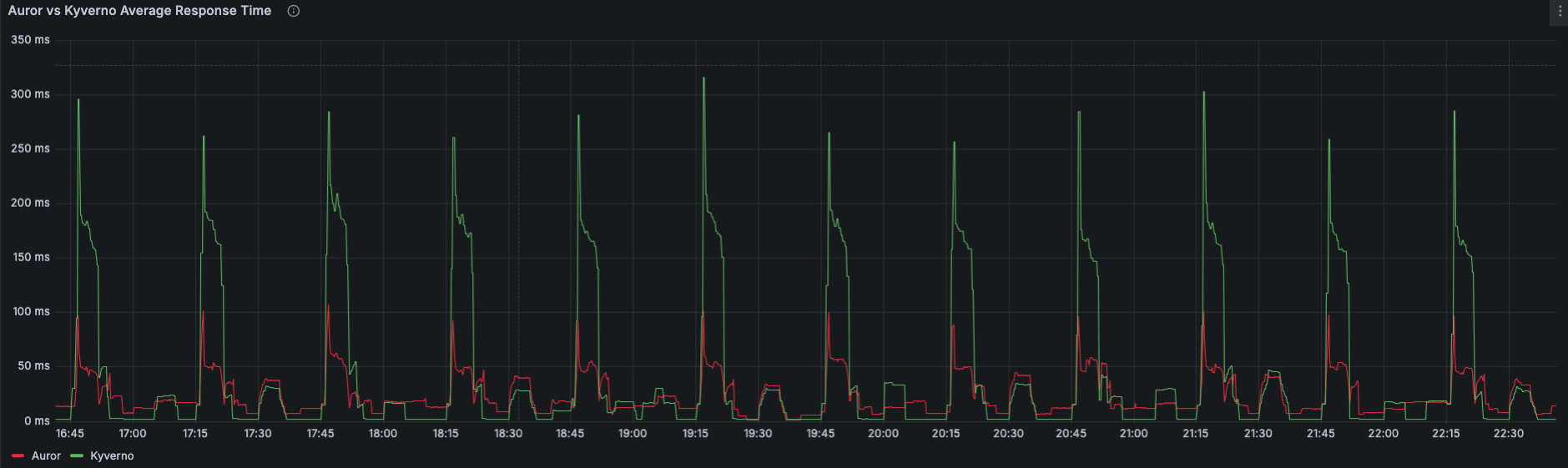

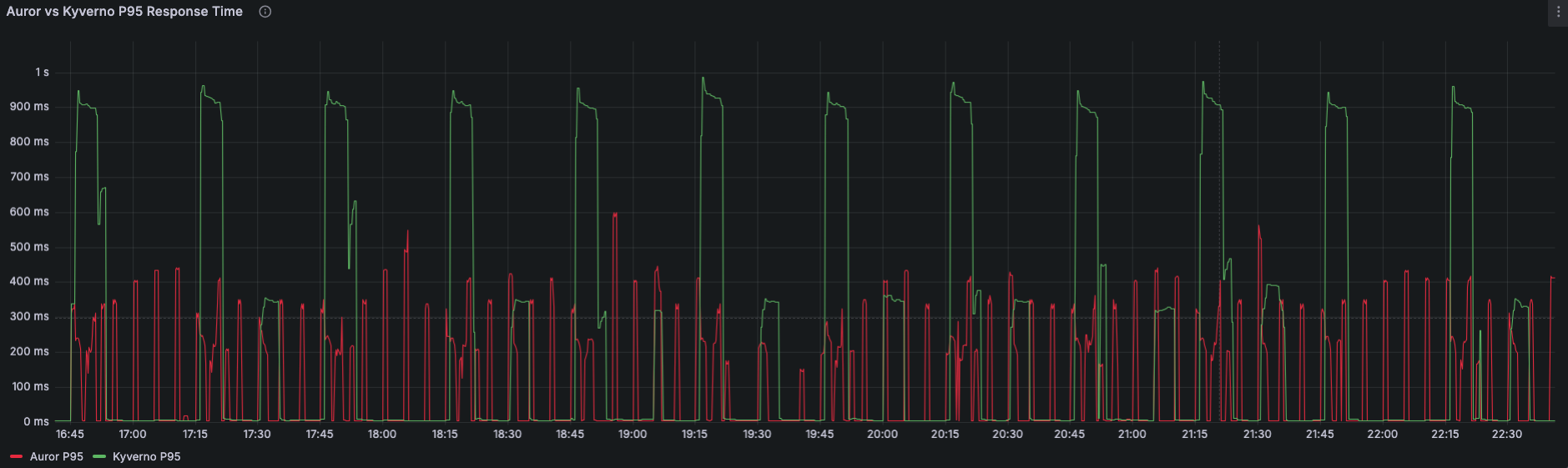

Performance: Auror’s caching consistently delivers faster response times than Kyverno, with average lookups typically well under 30 ms and P95 latencies staying below 400 ms. In contrast, Kyverno’s P95 response times frequently exceed 600 ms and can reach 1 second under load.

Resource Usage: Kyverno’s broader policy engine uses more than three times the CPU and memory of Auror’s image-validation logic.

Control & Cache Logic: We maintain full, low-level control over cache behavior (tag vs. digest) and never mutate resources by default. Kyverno, by contrast, is driven by policies that can mutate or annotate objects, and it only offers partial caching in audit mode.

Extensibility: Kyverno shines when you need mutation, generation, or rich policy types beyond images. Auror is purpose-built for signature enforcement, which makes it simpler but less general.

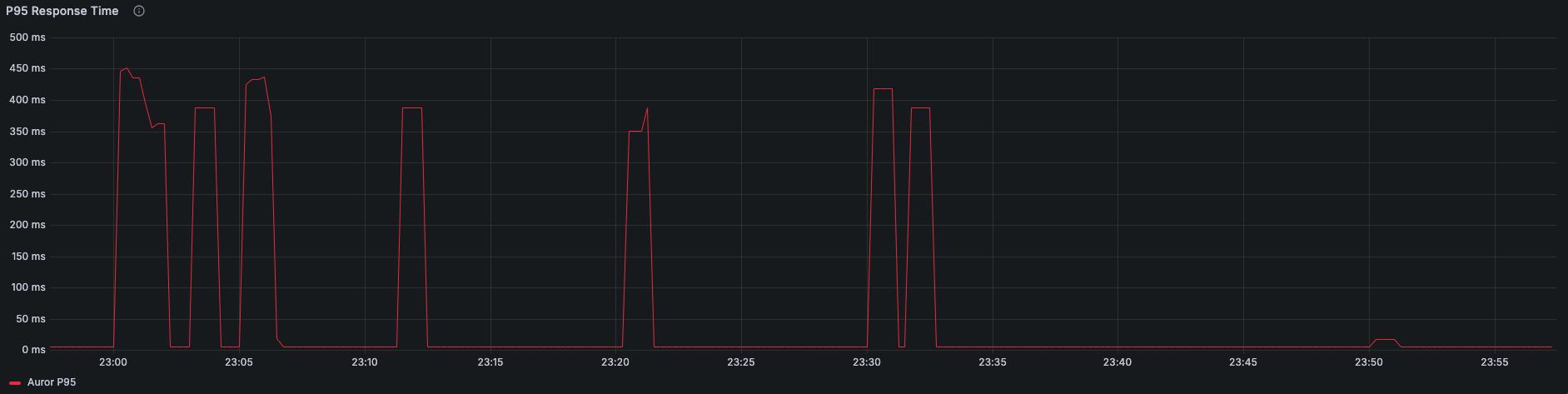

When we look at the P95 dashboards, we see that worst-case peaks are much higher for Kyverno. Overall, these results made it clear that we should go with our in-house solution.

Was It Worth The Hassle?

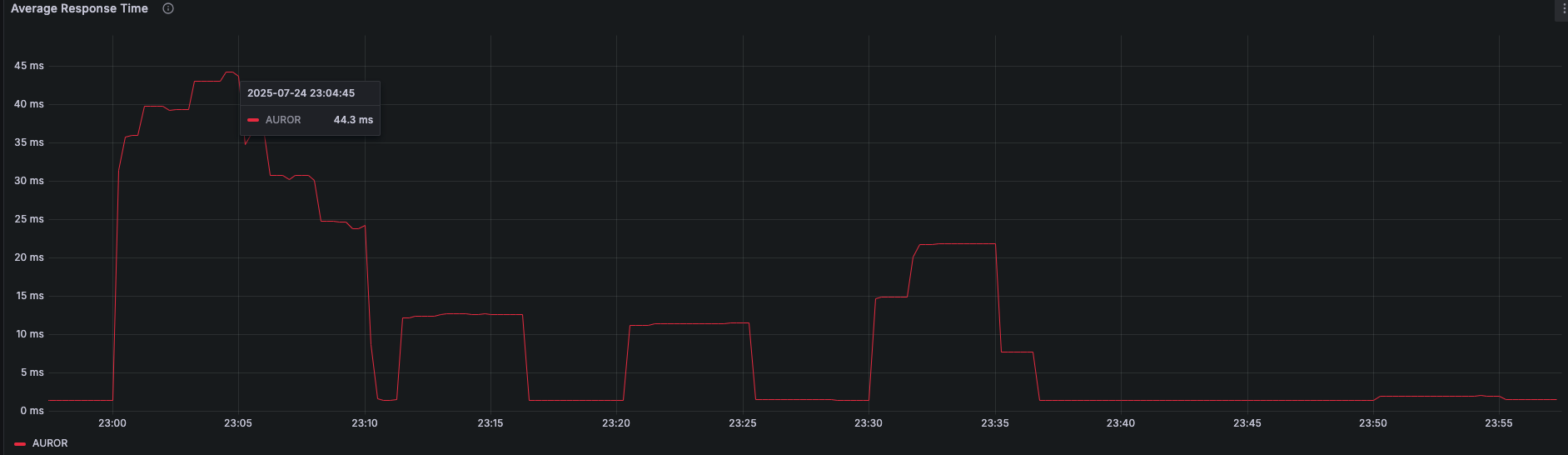

The only way to answer this is to look at the metrics directly: the average latency, and the P95 latency, which captures the worst-case peaks when Auror needs to make a call to ECR.

The average response times dropped significantly thanks to our cache. We rarely observe peaks in the P95 graph, and when we do, they are caused by calls to ECR. To mitigate those peaks, we came up with two approaches:

- We plan to warm up our webhook’s cache with mock requests sent by Officer, our CLI client. Since these are not API server requests, they serve only to cache the most frequently requested images.

- When Kubernetes nodes are rotated during routine cluster maintenance, the Auror pods are evicted and their cache is lost, which adds latency while the cache fills up again. To mitigate this, we plan to periodically write the caches to disk so they can be reused when the node is restarted.
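The on-disk cache is still a plan, so the following is only a sketch under assumptions: each tier is saved as a JSON map from cache key to expiry time, written to a temporary file and renamed into place so a crash mid-write leaves the previous snapshot intact, and entries that expired while the pod was down are dropped on load. The file format, `save`, `load`, and `tempSnapshotPath` are hypothetical, and where the file lives is left open; it has to be storage that survives the pod being evicted.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
	"path/filepath"
	"time"
)

// snapshot is a hypothetical on-disk format for one tier: cache key -> expiry.
type snapshot map[string]time.Time

// save writes the snapshot to a temporary file and renames it into place,
// so a crash mid-write leaves the previous snapshot intact.
func save(path string, s snapshot) error {
	data, err := json.Marshal(s)
	if err != nil {
		return err
	}
	tmp := path + ".tmp"
	if err := os.WriteFile(tmp, data, 0o600); err != nil {
		return err
	}
	return os.Rename(tmp, path)
}

// load restores a snapshot, dropping entries that expired while the pod was down.
func load(path string, now time.Time) (snapshot, error) {
	data, err := os.ReadFile(path)
	if err != nil {
		return nil, err
	}
	var s snapshot
	if err := json.Unmarshal(data, &s); err != nil {
		return nil, err
	}
	for key, expiry := range s {
		if !now.Before(expiry) {
			delete(s, key) // deleting during range is safe in Go
		}
	}
	return s, nil
}

// tempSnapshotPath returns a path inside a fresh temporary directory.
func tempSnapshotPath() (string, func(), error) {
	dir, err := os.MkdirTemp("", "cache-snapshot")
	if err != nil {
		return "", nil, err
	}
	return filepath.Join(dir, "digest-cache.json"), func() { os.RemoveAll(dir) }, nil
}

var epoch = time.Unix(1_700_000_000, 0)

func main() {
	path, cleanup, err := tempSnapshotPath()
	if err != nil {
		panic(err)
	}
	defer cleanup()
	entries := snapshot{
		"repo@sha256:aaa": epoch.Add(time.Hour),    // still valid at restart
		"repo:v1":         epoch.Add(-time.Minute), // expired during downtime
	}
	if err := save(path, entries); err != nil {
		panic(err)
	}
	restored, err := load(path, epoch)
	if err != nil {
		panic(err)
	}
	fmt.Println(len(restored)) // 1
}
```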

Conclusion

In this post, we have shown how we moved from a high-latency, timeout-prone, black-box third-party image admission webhook to an in-house solution that is rich in metrics and highly observable. The result is faster image validation, zero cross-region timeouts since we deployed it to production, and full visibility into accepted, rejected, and external images via Prometheus metrics. With Auror in place, our clusters stay fast, reliable, and secure!